Attribute MSA with JMP

Use JMP to Implement an Attribute MSA

This article shows how to run an attribute measurement system analysis (MSA) in JMP. This matters because whenever something is measured repeatedly, or by different people or processes, the results vary. The variation comes from two primary sources:

- Differences between the parts being measured

- The measurement system

A gage R&R study is a measurement system analysis (MSA) that determines what portion of the variability comes from the parts and what portion comes from the measurement system. Key results of the study help us identify the components of variation within the measurement system.

Data File: "AttributeMSA.jmp"

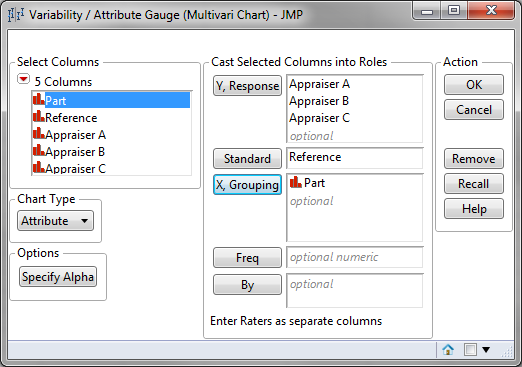

Run an Attribute MSA with JMP:

- Click Analyze -> Quality & Process -> Variability/Attribute Gauge Chart

- Select "Appraiser A", "Appraiser B" and "Appraiser C" as "Y, Response"

- Select "Part" as "X, Grouping"

- Select "Reference" as "Standard"

- Select "Attribute" as the "Chart Type"

- Click "OK"

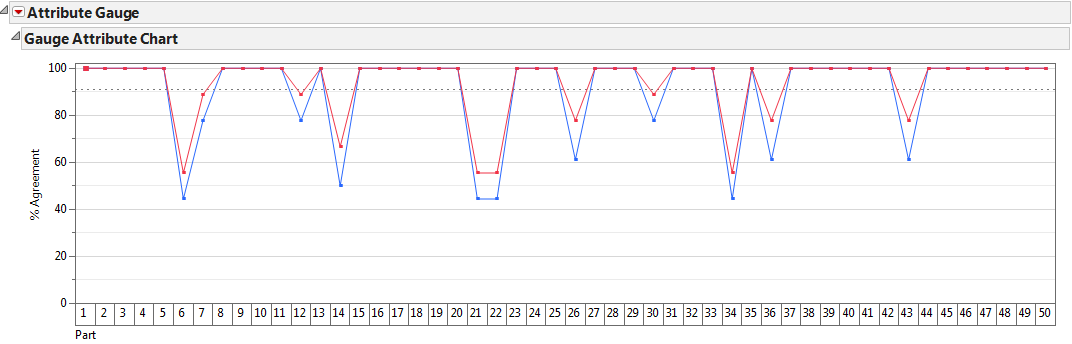

- Click on the red triangle button next to "Attribute Gauge"

- Click "Show Effectiveness Points"

- Click "Connect Effectiveness Points"

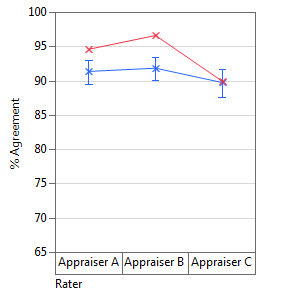

Percentage of agreement by appraiser

- Red line: the percentage of agreement with the reference level

- Blue line: the percentage of agreement between and within the appraisers

- When both lines sit at the 100% level across all parts and appraisers, the measurement system is perfect

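As a rough illustration of what the red line represents, the sketch below computes each appraiser's percentage of agreement with the reference. The pass/fail values are hypothetical toy data, not the contents of "AttributeMSA.jmp", and the helper name `agreement_with_reference` is made up for this example; JMP's own calculation may differ in detail.

```python
# Hypothetical pass/fail calls, one entry per part (toy data, not AttributeMSA.jmp).
reference = ["P", "P", "F", "P", "F"]
ratings = {
    "Appraiser A": ["P", "P", "F", "P", "F"],
    "Appraiser B": ["P", "F", "F", "P", "F"],
    "Appraiser C": ["P", "P", "F", "F", "P"],
}


def agreement_with_reference(calls, standard):
    """Return the percentage of calls that match the reference value."""
    matches = sum(c == s for c, s in zip(calls, standard))
    return 100.0 * matches / len(standard)


# Percentage of agreement with the reference, per appraiser.
effectiveness = {
    name: agreement_with_reference(calls, reference)
    for name, calls in ratings.items()
}

for name, pct in effectiveness.items():
    print(f"{name}: {pct:.0f}% agreement with reference")
```

An appraiser whose calls always match the reference scores 100%, which corresponds to the red line sitting at the top of the chart.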

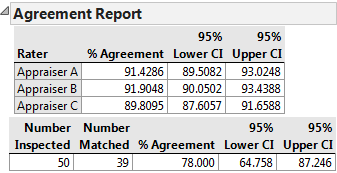

- % Agreement: the overall agreement percentage, both within and between appraisers. It reflects the precision of the measurement system

- In this example, 78% of the items inspected received the same rating across different appraisers and within each individual appraiser

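The overall agreement described above can be sketched as the share of parts on which every rating, from every appraiser and every repeated trial, is identical. This follows the wording of this article rather than JMP's documented formula, so the number JMP reports may be computed differently. The data and the names `all_agree` and `overall_agreement` are hypothetical.

```python
# Hypothetical ratings: part -> appraiser -> repeated trials (toy data).
trials = {
    1: {"A": ["P", "P"], "B": ["P", "P"], "C": ["P", "P"]},
    2: {"A": ["F", "F"], "B": ["F", "P"], "C": ["F", "F"]},  # B disagrees with itself
    3: {"A": ["P", "P"], "B": ["P", "P"], "C": ["F", "F"]},  # C disagrees with A and B
    4: {"A": ["F", "F"], "B": ["F", "F"], "C": ["F", "F"]},
}


def all_agree(by_appraiser):
    """True when every trial of every appraiser gave the same rating."""
    calls = [call for reps in by_appraiser.values() for call in reps]
    return len(set(calls)) == 1


def overall_agreement(data):
    """Percentage of parts rated identically within and between appraisers."""
    agreeing = sum(all_agree(by_appraiser) for by_appraiser in data.values())
    return 100.0 * agreeing / len(data)


overall = overall_agreement(trials)
print(f"Overall agreement: {overall:.0f}%")
```

In this toy data set only parts 1 and 4 are rated identically by everyone, so half of the parts count as agreeing.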

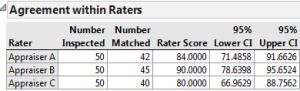

- Rater Score: the agreement percentage within each individual appraiser

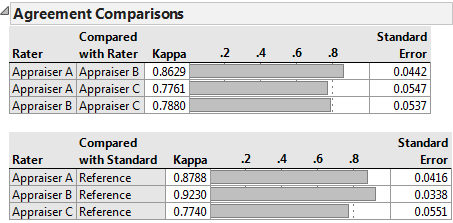

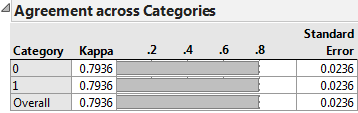

The Kappa statistic is a coefficient that measures how far the observed agreement exceeds the agreement expected by chance. Kappa ranges from −1 (perfect disagreement) to 1 (perfect agreement). When the observed agreement is less than the chance agreement, Kappa is negative. When the observed agreement is greater than the chance agreement, Kappa is positive. When the two are equal, Kappa is zero. Rule of thumb: if Kappa is greater than 0.7, the measurement system is acceptable; if Kappa is greater than 0.9, the measurement system is excellent.
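To make the definition concrete, here is a minimal sketch of Cohen's kappa for two appraisers, computed as (observed agreement − chance agreement) / (1 − chance agreement). It is only an illustration of the idea: the ratings are hypothetical, the function name `cohen_kappa` is made up for this example, and JMP may use a different kappa variant for its multi-appraiser and categorical tables.

```python
from collections import Counter


def cohen_kappa(rater1, rater2):
    """Cohen's kappa for two raters who rated the same items.

    Undefined (division by zero) when chance agreement is exactly 1,
    e.g. when both raters use a single category for every item.
    """
    n = len(rater1)
    # Observed agreement: share of items with identical ratings.
    p_o = sum(a == b for a, b in zip(rater1, rater2)) / n
    # Chance agreement: product of the raters' marginal proportions, summed over categories.
    c1, c2 = Counter(rater1), Counter(rater2)
    p_e = sum(c1[k] * c2[k] for k in set(c1) | set(c2)) / (n * n)
    return (p_o - p_e) / (1 - p_e)


# Hypothetical pass/fail calls on ten parts (toy data).
appraiser_a = ["P", "P", "P", "P", "P", "P", "F", "F", "F", "F"]
appraiser_b = ["P", "P", "P", "P", "P", "F", "F", "F", "F", "P"]

kappa = cohen_kappa(appraiser_a, appraiser_b)
print(f"Kappa = {kappa:.3f}")
```

Here the two appraisers agree on 8 of 10 parts (0.80 observed), while chance alone would give 0.52, so Kappa is 0.28 / 0.48, roughly 0.58, which falls below the 0.7 rule of thumb even though the raw agreement looks high.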

The first table shows the Kappa statistic for the agreement between appraisers. The second table shows the Kappa statistic for the agreement between each individual appraiser and the standard. The bottom table shows the categorical Kappa statistic, which indicates which category in the measurement has the best or worst agreement.

Based on these results, this can be considered an acceptable measurement system.